IEEE 754

January 07, 2020

Here at KingsDS I am sometimes asked to help translate a science project from its original language (Fortran, C, or Python, for example) to Javascript. A key step in that translation is verifying that the original and the translation produce the same results given the same input data. Often this is done with a simple RMSE (Wikipedia: RMSE) between the two sets of outputs. We don’t expect that to be zero, of course, because there may be some small differences in floating-point representation and math between the two languages, right?

Right?

It turns out that the answer hinges on which operations we’re performing in the calculation. Any chip that implements IEEE 754 (that’s most of them) is guaranteed to produce exactly the same output, given the same inputs, for the basic operations (addition, subtraction, multiplication, division, square root, etc.), because the standard requires those results to be correctly rounded. For many non-elementary operations, however, the result is not guaranteed (exponentiation being an important example). The standard only recommends correct rounding for these, so different math libraries may return results that differ in the last bit. For a full list of which operations are required and which are recommended by the standard you’d have to (pay for and) read it, but Wikipedia gives a decent summary: wikipedia.org/wiki/IEEE_754.
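“Exactly the same output” means the same bits, so it helps to look at the bits directly. Below is a minimal Javascript sketch that uses only the standard `DataView` and `BigInt` APIs. The helper name `toBits` is our own invention, not a library function:

```javascript
// Hypothetical helper: return the raw 64-bit IEEE 754 pattern of a double
// as a 16-digit hex string, so two results can be compared bit for bit.
function toBits(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x); // big-endian by default
  return view.getBigUint64(0).toString(16).padStart(16, "0");
}

// Addition is correctly rounded under IEEE 754, so this pattern should be
// identical on every conforming platform.
console.log(toBits(0.1 + 0.2)); // 3fd3333333333334

// exp is not required to be correctly rounded, so this pattern may
// legitimately differ between math libraries.
console.log(toBits(Math.exp(1)));
```

Comparing `toBits(a) === toBits(b)` is stricter than `a === b`, which treats `0` and `-0` as equal.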

So that’s the short answer to a short question. Now I’d like to turn to a case study (specifically, the case that brought all of this to my desk and made me want to write this blog post) where we got different results depending on language and hardware. I’ll also describe how we decided to use DCP to make it possible to guarantee bitwise-identical results for a calculation.

Case Study

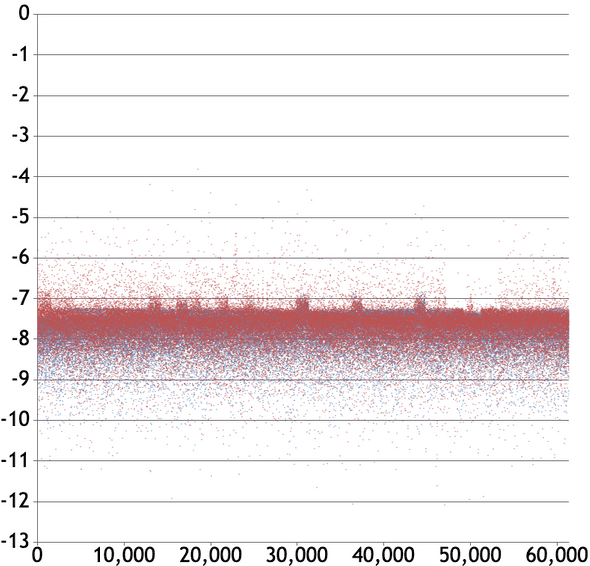

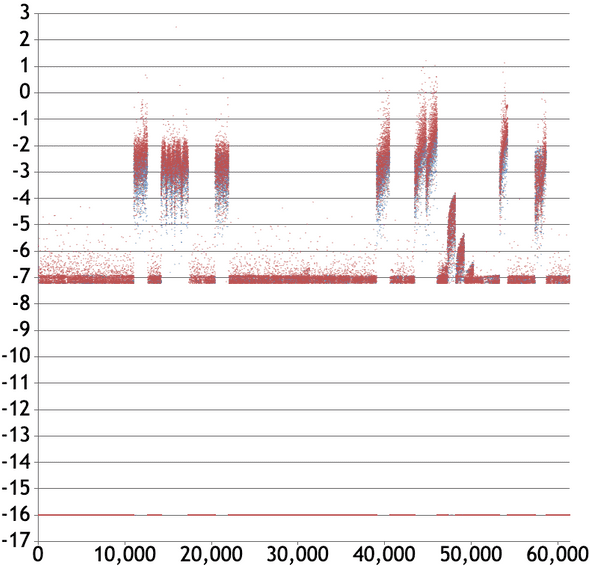

I won’t get into the actual physics being calculated here, but suffice it to say that the data is divided into “frames”, each of which contains tens of thousands of pairs. Two frames had particularly high RMSE values when comparing the output of the Python and JS versions on the same machine (an Intel processor). We can visualize the differences with a plot like this one. The color of each dot indicates which of the two quantities in the pair it corresponds to; they ended up being nearly the same, so I don’t recall which was which.

The vertical axis is

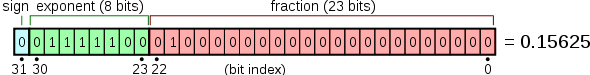

Here the formula compares the “ground truth” (original) result with the new result. It roughly gives the (negative of the) number of digits the two results have in common. The plot above is pretty typical. It indicates that, on average, the answers match in the first 8 digits and differ in the last 8 or so of the roughly 16 decimal digits a double’s mantissa holds. (The mantissa is sometimes called the fraction, as in the chart below, which is a reminder of how floats are represented in binary.)

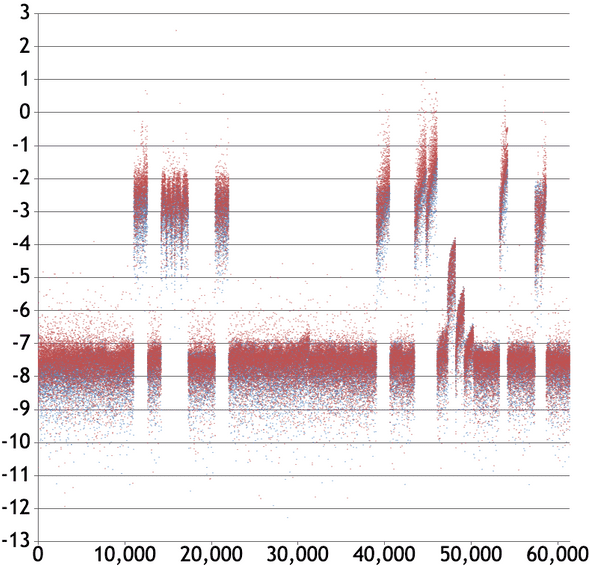
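A Javascript number is always an IEEE 754 binary64 double, so the fields in that chart can be read out directly. Here is a minimal sketch using `DataView` and `BigInt`; the function name `fields` is ours:

```javascript
// Split a double into its IEEE 754 binary64 fields:
// 1 sign bit, 11 exponent bits (biased by 1023), 52 fraction bits.
function fields(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x);
  const bits = view.getBigUint64(0);
  return {
    sign: Number(bits >> 63n),
    // Unbiased exponent; only meaningful for normal (non-subnormal) numbers.
    exponent: Number((bits >> 52n) & 0x7ffn) - 1023,
    // The 52 stored fraction bits, as a BigInt.
    fraction: bits & 0xfffffffffffffn,
  };
}

console.log(fields(1));    // sign 0, exponent 0, fraction 0n
console.log(fields(-2.5)); // -1.25 * 2^1: sign 1, exponent 1, fraction 2^50
```

A double has 52 fraction bits plus an implicit leading 1, which gives about 16 significant decimal digits. Disagreeing in the last 8 decimal digits therefore corresponds to roughly the last 26 or 27 bits of the fraction.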

Now, let’s take a look at the same sort of plot, only this time “ground truth” will be our Python results on an Intel processor and the “new result” will be our Javascript results on the same processor.

Those huge spikes are what put the RMSE over my personal limit and started this investigation. The plot indicates that for some values the Javascript result shares no digits with the Python result. (You’ll have to take my word for it that they were on the same order of magnitude; otherwise this measure isn’t very useful.)
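The post doesn’t show how the RMSE was computed. Below is a sketch of a per-frame check against a chosen limit. It assumes the usual RMSE definition and that each frame’s outputs arrive as flat arrays of numbers. Both function names are hypothetical:

```javascript
// Root-mean-square error between two equal-length arrays of results,
// e.g. one frame of output from the original code and from the translation.
function rmse(reference, candidate) {
  if (reference.length !== candidate.length || reference.length === 0) {
    throw new RangeError("inputs must be non-empty and the same length");
  }
  let sumSq = 0;
  for (let i = 0; i < reference.length; i++) {
    const d = candidate[i] - reference[i];
    sumSq += d * d;
  }
  return Math.sqrt(sumSq / reference.length);
}

// Hypothetical usage: list the frames whose RMSE exceeds a chosen limit.
function suspiciousFrames(pythonFrames, jsFrames, limit) {
  return pythonFrames
    .map((frame, i) => ({ index: i, error: rmse(frame, jsFrames[i]) }))
    .filter(({ error }) => error > limit);
}
```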

When I investigated those points and traced down exactly where the results started to diverge I found a calculation with lots of exp and pow which was in a self-updating loop of the form

while (error > tolerance) {

[input, error] = f(input)

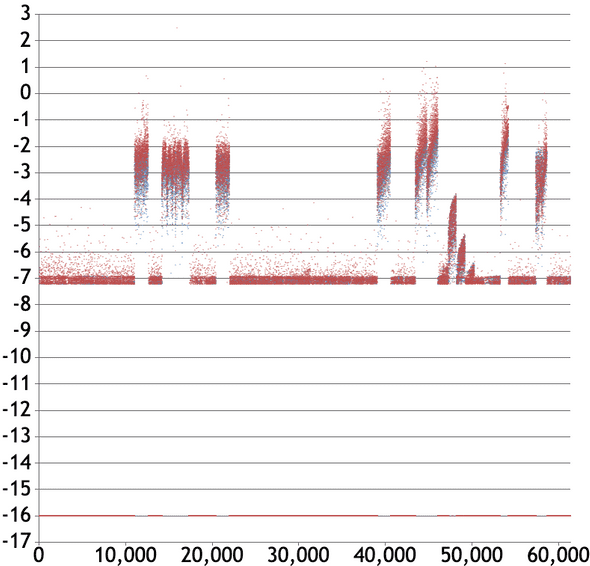

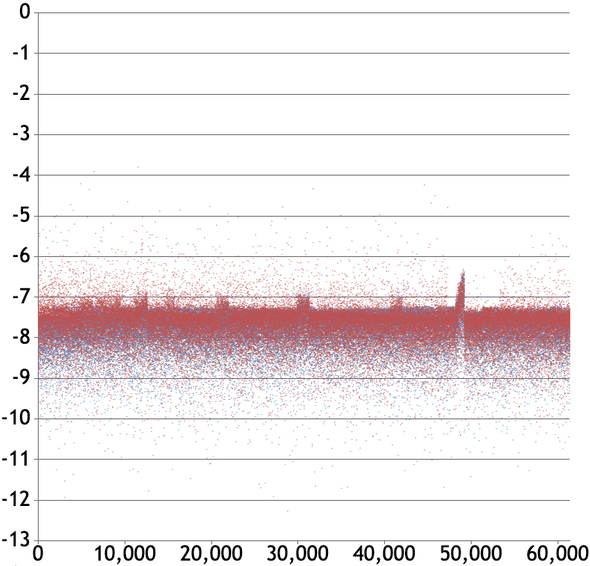

}There was a point where the difference between the python exp and the Javascript exp varied by one bit, and that caused another internal condition which was looking for var1 === var2 to be true in the Python version just a few iterations before it was true in the JS version. This is the point where I began to learn more about implementations of exp and could produce the summary this blog post opens with. With that knowledge in mind the next thing that I wanted to see is how does Python compare to itself on different processors. I happened to have a Linux box available with an AMD chip inside and a RaspberryPi with an ARM chip. Here’s how they compare to the Intel results:

(Sidenote: when a === b the vertical-axis formula gives -Infinity. We catch that case and set it to -16 to keep with this “number of digits” feel. That is the line along the bottom for all the points that were identical.)
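The vertical-axis formula is only shown as an image, so the sketch below is an assumption rather than the exact formula used for these plots. It takes the base-10 log of the relative difference and clamps identical values to -16, as in the sidenote. That reading fits the “number of digits in common” interpretation, because a one-ulp relative difference for a double is close to 1e-16:

```javascript
// ASSUMED formula (the original is only shown as an image):
// log10 of the relative difference between the ground-truth value and the
// new value. Identical values would give log10(0) = -Infinity, so they are
// clamped to -16, as in the sidenote. A ground truth of 0 is not handled.
function digitAgreement(truth, value) {
  if (truth === value) return -16;
  return Math.log10(Math.abs(value - truth) / Math.abs(truth));
}

// Apply it pointwise to two arrays of results to get the plotted series.
function agreementSeries(truths, values) {
  return truths.map((t, i) => digitAgreement(t, values[i]));
}
```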

So that confirms that while the Python-versus-Javascript differences are pretty large, for this application they are within the normal hardware-to-hardware variation and do not indicate a mistranslation.
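The “one bit” difference between the Python and Javascript exp results is usually measured in ulps (units in the last place), meaning the number of representable doubles between two values. Here is a minimal sketch that assumes finite, non-NaN inputs (the names are ours):

```javascript
// Map a double's bit pattern onto a BigInt number line where adjacent
// doubles differ by exactly 1; negative values are mirrored below zero,
// and +0 and -0 both map to 0.
function orderedBits(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x);
  const bits = view.getBigInt64(0); // signed view: negative iff sign bit set
  return bits < 0n ? -(bits & 0x7fffffffffffffffn) : bits;
}

// Distance between two finite doubles, in ulps.
function ulpDistance(a, b) {
  const d = orderedBits(a) - orderedBits(b);
  return d < 0n ? -d : d;
}

console.log(ulpDistance(0.1 + 0.2, 0.3)); // 1n
```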

Just for fun, let’s compare Javascript on different machines. First, let’s compare the Python results on AMD to the Javascript results on Intel:

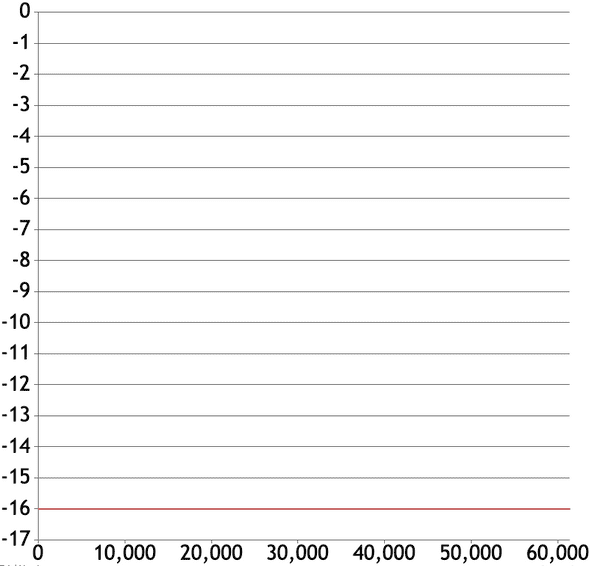

Wow! This indicates that the JS results on Intel are pretty similar to the Python results on AMD, at least relative to the -8 baseline. (We don’t have any -16s, so no values match exactly.) How about JS between Intel and AMD?

Interesting! Why is Python different on different machines but Javascript is the same?

FDLIBM

As it turns out, Chrome and Firefox (at the time of this writing) both implement exponentials, sine, cosine, etc. with the same non-native library, rather than with the platform’s own math library. That library is called fdlibm. From its top-level comments:

> FDLIBM (Freely Distributable LIBM) is a C math library for machines that support IEEE 754 floating-point arithmetic. In this release, only double precision is supported.
>
> FDLIBM is intended to provide a reasonably portable ..., reference quality (below one ulp for major functions like sin,cos,exp,log) math library.

This is no coincidence! The official ECMAScript Language Specification recommends (though it does not require) that implementations use the algorithms in fdlibm for these functions.

Conclusion

In light of the above, the question is not “Does my Javascript translation produce identical results to the original?” It becomes “Does my Javascript translation vary from the original by no more than the original varies from itself on different machines?” The Javascript results do vary from the Python results on the same architecture (Intel). However, that variance is quite similar to how the Python results vary across architectures (AMD). Javascript engines that follow the ECMAScript recommendation use the same non-native math library for transcendental functions and their ilk, regardless of architecture. We can therefore guarantee bitwise-identical results across machines as long as the Javascript engine uses fdlibm under the hood. Since this might be useful to some DCP developers, we plan to provide an fdlibm flag in the future that will only run jobs in sandboxes that use fdlibm.
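How that flag will work isn’t described here, and nothing below is a DCP API. One hypothetical approach is for a sandbox to evaluate a fixed set of probe inputs and compare the resulting bit patterns against a reference fingerprint. That fingerprint would be recorded on an engine known to use fdlibm. The probe inputs here are arbitrary, and no reference values are included:

```javascript
// Hypothetical probe set: arbitrary inputs, not a vetted test suite.
const PROBES = [0.5, 1, 2.5, 10, 100.25];
const FUNCS = { exp: Math.exp, log: Math.log, sin: Math.sin, cos: Math.cos };

// Raw 64-bit pattern of a double as a 16-digit hex string.
function hexBits(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x);
  return view.getBigUint64(0).toString(16).padStart(16, "0");
}

// One "name(input)=bits" entry per (function, probe) pair.
function mathFingerprint() {
  const entries = [];
  for (const [name, fn] of Object.entries(FUNCS)) {
    for (const x of PROBES) entries.push(`${name}(${x})=${hexBits(fn(x))}`);
  }
  return entries;
}

// True only if this engine reproduces every reference entry bit for bit.
// `reference` would come from a run on an engine known to use fdlibm.
function matchesReference(reference) {
  const mine = mathFingerprint();
  return mine.length === reference.length &&
    mine.every((entry, i) => entry === reference[i]);
}
```

Matching a fingerprint is evidence, not proof. Two different libraries can agree on every probe and still disagree elsewhere.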

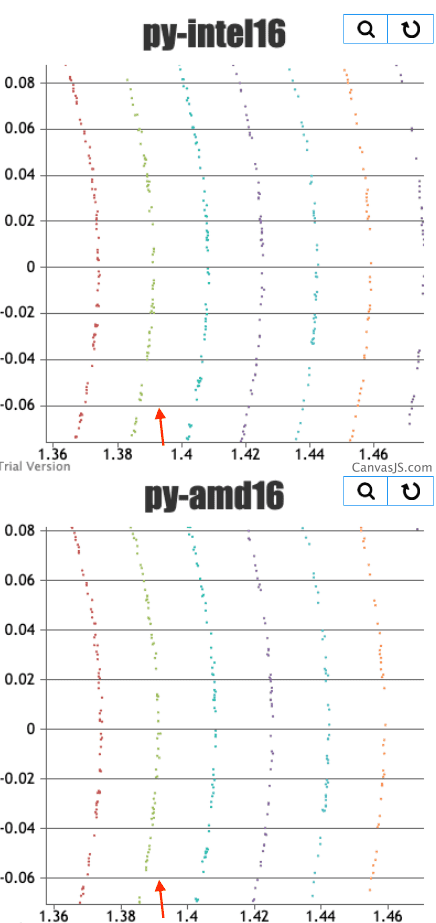

So what about the differences? What can or should we do if we want more similar results without changing the underlying library? That depends on the application. The algorithm may need to be changed to be less sensitive to last-bit differences, or you may need to use interval arithmetic. In this specific case, the final results do not differ in a way that matters to the application. Here’s a zoomed-in scatter plot of some of those tens of thousands of points, with red arrows pointing out the largest spike I found:

In some applications this could be very important, but in this one it turns out to be sufficiently consistent.
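For applications where it does matter, one way to make an algorithm less sensitive to last-bit differences is to replace exact tests with a comparison that allows a few ulps of slack. The var1 === var2 check from the case study is an example of such an exact test. Here is a minimal sketch. The default of 4 ulps is an arbitrary choice, not a value from the original project, and whether any slack is acceptable depends on the algorithm:

```javascript
// Map a double onto a BigInt line where adjacent doubles differ by 1.
function orderedBits(x) {
  const view = new DataView(new ArrayBuffer(8));
  view.setFloat64(0, x);
  const bits = view.getBigInt64(0);
  return bits < 0n ? -(bits & 0x7fffffffffffffffn) : bits;
}

// True when a and b are at most maxUlps representable doubles apart.
// NaN never compares equal; other inputs are assumed to be finite.
function nearlyEqual(a, b, maxUlps = 4n) {
  if (Number.isNaN(a) || Number.isNaN(b)) return false;
  let d = orderedBits(a) - orderedBits(b);
  if (d < 0n) d = -d;
  return d <= maxUlps;
}

// Instead of:  if (var1 === var2) { ... }
// consider:    if (nearlyEqual(var1, var2)) { ... }
```

This changes when an iterative loop decides it has converged. Validate it against the original results rather than dropping it in blindly.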